Overview

Foucault covers the subjects of torture, punishment, discipline, and surveillance in this important book. It works largely as a history, beginning in the 1750s and following the matter of punishment until the 1850s or so. Foucault is writing in the 1970s, when public surveillance was becoming an issue in England, and possibly elsewhere, so this may have influenced his approach. The historical change from 1750 to 1850 is the disappearance of torture and the transition of the object of punishment and discipline from the corporeal body to the soul. Alongside this is the emergence of a technology of discipline and power, which constructs a self-observing, self-disciplining society.

The most relevant bit in this is the progressive disembodiment of punishment, and the idea of the carceral society, which to some may be a utopian society, and which is totally contained within its frame of ideology. This is very reminiscent of the enclosing capacities of simulation. A simulation limits everything it represents to that which is definable by its model, and a carceral society willfully enforces its ideology through discipline and surveillance. Resistance to a discourse is still a part of that discourse.

Notes

The Body of the Condemned

Pain and spectacle: Over time, these disappear from punishment. The reform places the body out of bounds as the recipient of punishment. Instead, the body becomes something to be constrained, obliged, and prohibited. As evidence of the change brought by the reform, Mably writes that punishment should strike the soul, not the body. (p. 11)

The aim of the book is to understand soul, judgment, and power. Power occurs in a political economy over the body. The image of the economy is very prevalent throughout Foucault; its emergence reflects the period of mercantilism that preceded the reform movement. Economy implies a regular system of exchanges, and an uneven distribution of capital. (p. 23)

Common thought of the time: The body is the prison of the soul, which is very reflective of the prevalent dualism of this period of time. Despite the arguable humanity of the new prison system, revolts occur within the modern system, protesting the situation of the prisoners. The new system is reflective of a new technology of power. (p. 31)

The Spectacle of the Scaffold

Torture is a means of inscribing, by pain, the truth of a crime on a criminal. It is by nature spectacular. The issue of truth of a crime becomes significant later. Torture also serves as a ritual, a symbolic means of formalizing the law in the minds of people as a cultural practice. (p. 35)

The power relationship between the condemned and the sovereign: In a society with a sovereign, the state is equated to the body of the king. The criminal is one who attempts to assert an unauthorized power, which is thus a bodily assault on the authority of the king. The punishment deprives the criminal of power, and visibly enforces the power of the sovereign. In the spectacle of torture, the spectators are witnesses and consumers of the event. (p. 54)

Generalized Punishment

Punishment is an expression of the universal will of the state. The reform movement attempts to challenge the use of punishment as vengeance, pushing for punishment without torture. (p. 74)

There is an economy of punishment that reflects an economy of power. At the center of the reform is an attempt to undermine the centrality of the power of the monarch, around whom was spread a “bad economy of power”. The reformers are attempting to establish a right to punish without the authority of the sovereign. (p. 79)

Illegality also forms an economy, and was widely employed as a social practice. This derives from a general non-observance or abeyance of the law. The illegality is a necessary component within the society, but forms an odd paradox when compared to the criminal. Those who practiced illegality with violence or hurt the general population were scandalized, but general illegality (particularly theft) was widely accepted. Around this practice formed a network of glorification and blame. Thus there was a level of obligation and social custom that operated in spite of the law. (p. 83)

Illegality of property was generally exercised by the lower classes in rampant theft. There was also an illegality of rights practiced by the merchant classes. This represents the change in the economy of illegality associated with the rise of capitalism. The rampant illegality essentially resembles social tactics without a strategy to hold it at bay. Punishment reform is a strategy for a new social system. (p. 87)

The new economy of punishment is based on the concept of the social contract. Criminality in that sense is inherently paradoxical: the criminal is both an enemy of and a member of society. This change is an enormous shift from the authority of the sovereign in torture. Thus the criminal is a traitor to the state. (p. 90)

The change in punishment was reflected by an intense level of calculation and determination of the principles of just and correct punishment. (p. 94) The result of this is a new calculated economy of power disguised as mercy. The object of power, however, is no longer the body, but the mind or character. (p. 101) Foucault cites Servan on the next page: “A stupid despot may constrain his slaves with iron chains; but a true politician binds them even more strongly by the chain of their own ideas; it is at the stable point of reason that he secures the end of the chain; this link is all the stronger in that we do not know what it is made of and we believe it to be our own work; …” (p. 102-103) ref (Servan, 35)

The Gentle Way in Punishment

Forces, attractions, and values: The ideas of compulsion, attraction, and repulsion stem from a Newtonian metaphor. Also central to this theme of punishment is the idea that the state is a natural phenomenon, and crime is distinctly unnatural. This flies directly in the face of illegality as a common cultural practice. Essentially, it is an ideological strategy to combat an emergent tactic. (p. 104)

In regards to the vision of a “just society”: Law attempts to counter the historical tradition of the affairs of criminals of old being celebrated in culture and tales. A glorification of outlaws and lawbreakers is very prevalent in many cultures. The vision of the just society aims to replace that with a reverence for the austerity of the law, and have a distinct openness in the city. Everywhere within the just city is the influence and inscription of the law. And education is meant to describe and glorify the law as well. This vision paves the way for the carceral society to come. (p. 113)

Docile Bodies

In regards to discipline, Foucault looks at the soldier, who is constructed as a product of molding via discipline. This, again, treats the body as an object of operation: it deconstructs the body into various independent components. The aim is to shape each force of the body: maximize those forces that yield utility, and minimize other forces so that the body might be obedient. (p. 137)

Discipline is the methodical mastery over little things. Its aim is to spread central control to every minute detail of the body of a subject, while expending minimal effort to control these bodies. This echoes again the idea of a technology of power: to distribute and maximize optimally. Discipline is, in a sense, the antithesis of emergence. Discipline also resembles the way that people interact with machines and computers: by working with them, humans become more like machines. These can be connected through Marx and Weizenbaum.

The Means of Correct Training

A precursor to panopticism: Surveillance is a requirement for discipline. The purpose of discipline is to train, but for what? (p. 173)

Discipline is a normalizing process: It punishes and rewards according to established social formations, and attempts to make even that which is uneven. This is reminiscent of role gratification: performance of a role is met with rewards and gratification, but failure is met with lack of support. Role learning is a disciplinary process. Examination is described here as a ritualized interaction, and involves a presentation of self. A component of discipline is being subject to examination and gaze. (p. 184)

Panopticism

The chaos of the plague is met with a focused ordering of life: sectioning, visibility, and isolation. The physical corporeality of bodies is mixed with the ideas of sickness and evil. (p. 197)

In the Panopticon: There is a dissociation of the visibility dyad, and an automatization and disindividualization of power. Power exists, but it exists in the minds of subjects, without necessarily a physical presence to enforce that power. Cells are transformed into stages, where actors are compelled to constantly be performers. This allows for an individuality of the prisoners, though. (p. 203) The Panopticon also enables a laboratory of power, whereby the authority may conduct experiments and tests (developing technology and improving the efficiency of power) on the distributed system of the Panopticon.

Society has changed from that of a spectacle to that of the Panopticon. Life is no longer like an amphitheatre, but we are still performers, watching each other. (Consistent with Goffman?) Panopticism is a power technology to improve the efficiency of power. (p. 217)

The object of justice shifts away from the physical body, and away from the contractual one, towards a new thing, a “disciplinary body”. This body can be deconstructed into its component parts, and each may be operated on, molded, and “corrected” independently. (p. 227)

Panoptic society has surveillance, and does not give individuals access to the information being stored about them. When such practices are exposed (e.g., wiretapping), popular reaction opposes the system and there is outrage. The problem is that, with the dissociation of the gaze, the individual has no ability to understand how he is being seen. More than knowledge is necessary to topple the system, though. The individual is reduced to pieces and surveilled, but has no independent power to understand how he is dissected, and no understanding of what is found there. Thus, to successfully resist the panoptic society, one must have full self-knowledge, because that cannot be taken away.

Complete and Austere Institutions

With isolation, the matter of the self and conscience comes into play. Prison coerces order and social rules by replacing society at some levels. Those who designed prisons aimed to have the prison serve as a reduced society (a sub-simulation) where the minimal elements of society were still present, but prisoners would be isolated or prohibited from interacting with each other regularly. (p. 239) Prison life is hardly reflective of the outside, though. What happens to self and performance when the subjects are in total isolation? Society hinges on performance and interaction; what happens when one or both are taken away?

The prison substitutes the delinquent for the offender. This attributes an individuality, total knowability, and potential reformability to the criminal. A lot of the philosophy justifying prisons is rooted in the correctability of criminals and justification of the law. This substitution also brings a demand for total knowledge of the subject. (p. 251)

Illegalities and Delinquency

The prison produces and encourages delinquency. It encourages a loyalty amongst prisoners, and promotes the idea of warders as unauthorized to correct, train, or provide guidance. The focus here is the failure of the prison to perform a corrective function. The reason for this failure involves the cultural foundation of illegality. (p. 267)

The Carceral

Foucault opens the final chapter by discussing a colony, which becomes the example of a contained carceral society. The role of instructors (not educators) in direct development is to impose morals and encourage subjects to be docile and capable. There is a direct reference to Plato’s Republic: children in the colony were taught music and gymnastics. The colony also has a circularity: Instructors are subjects as well. This leads to a closedness of the social model. (p. 294)

More on the enclosed and contained nature of the carceral: Like a closed simulation, the carceral society must contain every projection of things within its model. (It must be mathematically complete). What of the simulation outlaw? The utopia encodes the law into society, so in its simulation, the outlaw is an impossibility, fundamentally and intrinsically unexplainable. (p. 301)

The carceral relates very closely to simulation, even in the Baudrillardian sense: Simulacra enclose and define the carceral society via their isolation of law and ideas. But it is not really law, but ideology. An open question is: who is behind it? It may be that no one is, and the order of the society falls towards infinite regress. But, in reality, laws are made, and simulations are defined. (p. 308) Foucault’s history is sort of anti-narrative. So, while power exists, Foucault is reluctant to name individuals or events behind the application of power. It makes for a disconcerting approach, leaving the reader wondering why or how the state of affairs is the way that it is. An extreme approach is to claim that power is totally self-generating, and indeed, in the carceral society, it is, but there still must be agents behind any change.

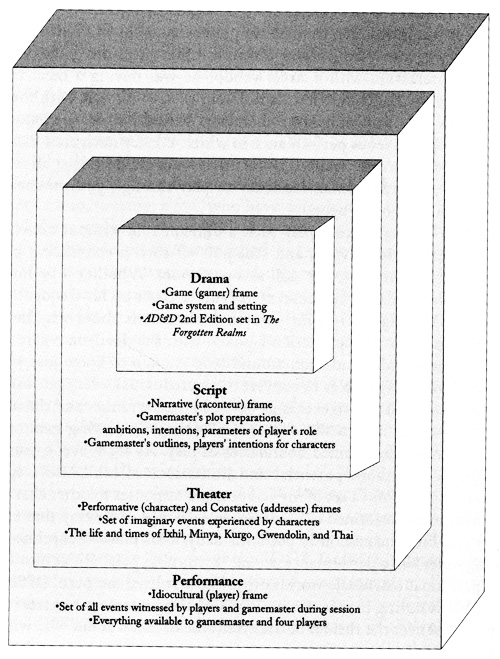

Connecting Schechner on performance: Rules guide a performance through constraint, creating safety and security. Note that this is entirely consistent with conversation with Miashara earlier. The RPG narrative is created by performance. This is interesting to compare with other game studies, the relationship between performance and play. The difference between Schechner and the RPG model has to do with the code that defines the performance: “The role-playing game exhibits a narrative, but this narrative does not exist until the actual performance. It exists during every role-playing game episode, either as a memory or as an actual written transcription by the players or game master. It includes all the events that take place in character, nonplayed character backstories, and the preplayed world history. It never exists as a code independent of any and all transmitters, like Schechner’s definition for drama suggests.” (p. 50)
